Most people using AI to build apps are stuck in “prompt chaos.”

Every session starts from scratch.

Every chat forgets your last instruction.

Every output is slightly… off.

But the problem isn’t the model.

It’s how you’re using it.

The real unlock?

It’s something few are talking about yet: Context Engineering.

Popularized by Andrej Karpathy, context engineering isn’t just another prompt trick.

It’s a workflow shift—a way to turn LLMs into focused, memory-aware dev assistants that can build full apps, not just snippets.

In this post, I’ll show you the 4-part workflow that real AI builders are using to build full-stack apps faster and smarter, with far less hallucination, repetition, and chaos.

Let’s break it down 👇

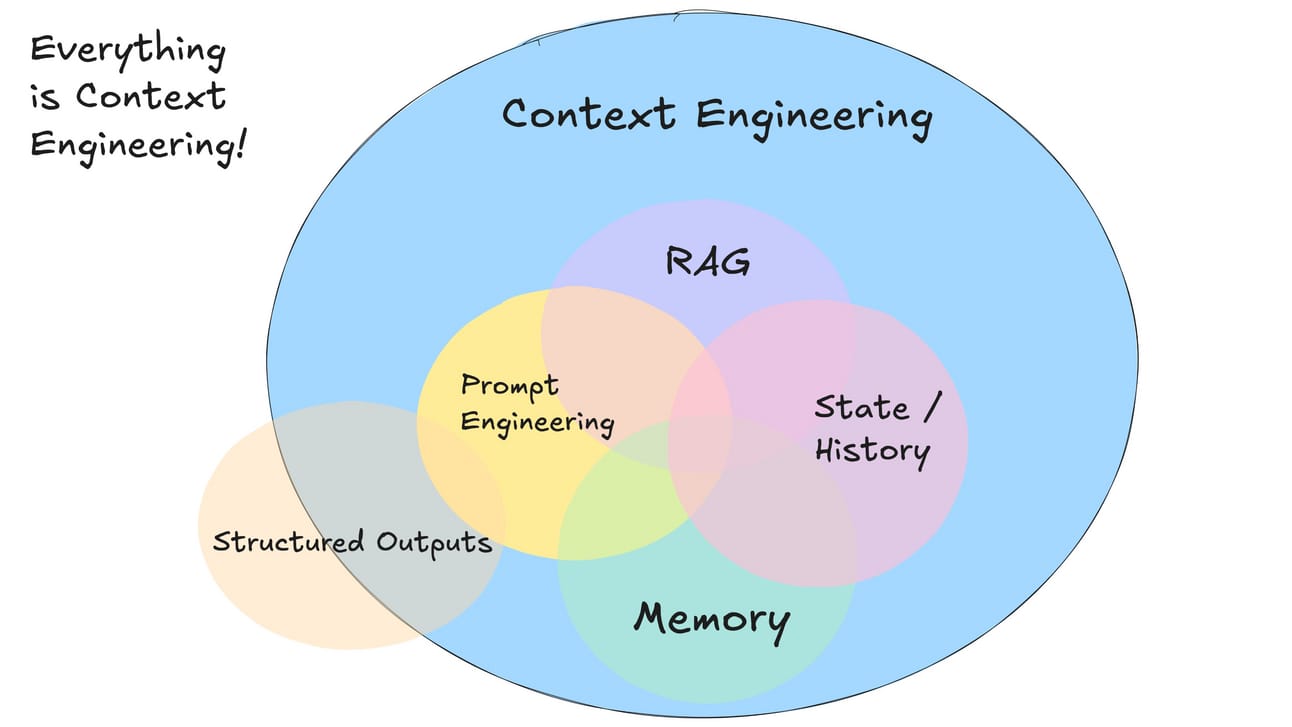

💡 What Is Context Engineering?

Context Engineering is how you structure, feed, and manage information for an LLM to work across multiple steps like a real dev assistant.

It’s not just “prompting smarter.”

It’s creating a memory system—rules, docs, structure, instructions—that the AI can use like a senior engineer uses project documentation.

Karpathy put it perfectly:

“We're now shifting from prompt engineering to context engineering.”

And it’s not just about the prompts. It’s about:

The tools you use (Claude, Cursor, Replit Ghostwriter, etc.)

The files you give the model

How you teach the model to use those files

And when/how you serve information to avoid overloading the context window

🧠 Why This Shift Matters

We’ve moved beyond the “ChatGPT wrapper” era.

Apps like Cursor, Claude Code, and other coding agents are now:

Parsing entire project structures

Running workflows step-by-step

Recalling context from session to session

Acting as intelligent assistants—not just autocomplete bots

Context Engineering turns these tools into production-grade dev agents.

“LLMs don’t just need prompts. They need rules, memory, and scoped context to work like real devs.”

🛠️ The 4-Part Context Engineering Workflow

Let’s walk through a method you can use inside Claude Code or Cursor. No special setup is needed, just structured inputs.

Together, the four parts give the model reusable memory and step-by-step execution:

1️⃣ Start with a Clear PRD (Product Requirements Doc)

Define what you want built. Be explicit about:

Features

Frontend/backend preferences (e.g. Next.js, FastAPI)

Business goals

MVP vs full app scope

"In the PRD, I listed that I wanted Next.js on the frontend and FastAPI on the backend."

Even if you don’t know the stack yet, this step sets up the generation prompt that guides everything else.
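If it helps to keep the PRD explicit, here’s a minimal Python sketch (an illustration, not a required format) that captures the fields listed above in a dataclass and renders them to Markdown. The `PRD` class, its field names, and the allowed scope values are assumptions for this example.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical scope labels; the post contrasts "MVP" with "full app".
ALLOWED_SCOPES = {"MVP", "full app"}


@dataclass
class PRD:
    """Hypothetical container for the PRD fields listed above."""

    name: str
    features: list[str]
    frontend: str  # e.g. "Next.js"
    backend: str  # e.g. "FastAPI"
    business_goals: list[str]
    scope: str  # one of ALLOWED_SCOPES

    def __post_init__(self) -> None:
        # Fail early on an ambiguous scope instead of letting the model guess.
        if self.scope not in ALLOWED_SCOPES:
            raise ValueError(f"scope must be one of {sorted(ALLOWED_SCOPES)}, got {self.scope!r}")

    def to_markdown(self) -> str:
        """Render the PRD as plain Markdown the model can read."""
        lines = [f"# PRD: {self.name}", "", "## Features"]
        lines += [f"- {feature}" for feature in self.features]
        lines += ["", "## Stack", f"- Frontend: {self.frontend}", f"- Backend: {self.backend}"]
        lines += ["", "## Business goals"]
        lines += [f"- {goal}" for goal in self.business_goals]
        lines += ["", "## Scope", self.scope]
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    prd = PRD(
        name="Example app",
        features=["Sign-up", "Dashboard"],
        frontend="Next.js",
        backend="FastAPI",
        business_goals=["Validate demand"],
        scope="MVP",
    )
    print(prd.to_markdown())
```

You’d save the output as something like `prd.md` (a hypothetical filename) so every later step reads the same source of truth.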

2️⃣ Use a “Generate Rule” to Create Context Files

This rule tells the LLM to convert your PRD into 4 essential documents:

✅ implementation.md → Task-by-task build plan

🎨 ui-ux.md → Layout, flows, frontend UX

🧱 structure.md → Folder/file/project architecture

🐛 bugs.md → Future bugs/issues tracker

“Once all four files are generated, that becomes the complete context.”

Don’t overload the model—just modularize.

This gives the LLM scoped memory, not a dump of random prompts.
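A generate rule is ultimately just a prompt. Here’s a minimal sketch of one, assuming you send it to your model however you normally do: `build_generate_prompt` (a hypothetical helper) wraps the PRD with instructions to produce the four files, and `missing_context_files` (also hypothetical) reports which of them still need to be generated. The prompt wording is illustrative only.

```python
from __future__ import annotations

from pathlib import Path

# The four context files described above, with the purpose of each.
CONTEXT_FILES = {
    "implementation.md": "Task-by-task build plan",
    "ui-ux.md": "Layout, flows, frontend UX",
    "structure.md": "Folder/file/project architecture",
    "bugs.md": "Future bugs/issues tracker",
}


def build_generate_prompt(prd_text: str) -> str:
    """Hypothetical 'generate rule': ask the model to split the PRD into the four files."""
    file_list = "\n".join(f"- {name}: {purpose}" for name, purpose in CONTEXT_FILES.items())
    return (
        "Read the PRD below and produce exactly these files, one section per file:\n"
        f"{file_list}\n"
        "Stay within the scope stated in the PRD.\n\n"
        "PRD:\n"
        f"{prd_text}"
    )


def missing_context_files(docs_dir: Path) -> list[str]:
    """Return the context files that do not exist yet in docs_dir."""
    return [name for name in CONTEXT_FILES if not (docs_dir / name).is_file()]
```

Once `missing_context_files` returns an empty list, the full context is in place and you can move on to the workflow rule.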

3️⃣ Apply a “Workflow Rule” That Teaches the Model How to Use the Files

This is a small file (always in context) that tells the LLM:

Use implementation.md to pick tasks

Use ui-ux.md for frontend layout or styling

Use structure.md before generating files

Use bugs.md to avoid duplicating issues

“This rule regulates the entire process. Keep it short so it always fits in the context window.”

This is what transforms Claude or Cursor from a smart chatbot into an actual assistant.
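Here’s a minimal sketch of a workflow rule and the file routing it implies. The rule text, the `workflow-rule.md` filename, the step names, and `files_for_step` are all hypothetical; the point is that the short rule is loaded every time, and each step pulls in only the one file it needs.

```python
from __future__ import annotations

# A hypothetical workflow rule, kept short so it can stay in context on every turn.
WORKFLOW_RULE = """\
Before each step:
- Pick the next task from implementation.md.
- Check ui-ux.md for any frontend layout or styling work.
- Check structure.md before creating or moving files.
- Check bugs.md so you don't duplicate known issues.
"""

# Which context file each kind of step should read (illustrative mapping).
STEP_TO_FILES = {
    "pick_task": ["implementation.md"],
    "frontend": ["ui-ux.md"],
    "create_files": ["structure.md"],
    "fix_bug": ["bugs.md"],
}


def files_for_step(step: str) -> list[str]:
    """Return the files to load for a step; the rule file is always included first."""
    if step not in STEP_TO_FILES:
        raise KeyError(f"unknown step: {step!r}")
    return ["workflow-rule.md", *STEP_TO_FILES[step]]
```

Keeping the mapping this small is deliberate: the rule only has to say *where* to look, not repeat what the files contain.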

4️⃣ Let the Agent Execute — One Task at a Time

From here, Claude or Cursor can begin building:

Backend, frontend, folders, and UI: all scaffolded

Tasks pulled from implementation.md

New chats can still pick up from the docs

Bugs and retries scoped to your files

“Even if the session resets, the context is in the docs—not the memory.”

This means no re-explaining and no loss of flow.
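Because progress lives in the docs, a new session only needs to read `implementation.md` to know where to resume. Here’s a minimal sketch, assuming the plan uses Markdown checkboxes (`- [ ]` / `- [x]`), which is an assumption about format rather than something the workflow requires; `next_task` and `mark_done` are hypothetical helpers.

```python
from __future__ import annotations

import re

# Assumes tasks are Markdown checkboxes: "- [ ] task" (open) or "- [x] task" (done).
TASK_LINE = re.compile(r"^\s*[-*] \[( |x|X)\] (.+)$")


def next_task(implementation_md: str) -> str | None:
    """Return the first unchecked task, or None when everything is done."""
    for line in implementation_md.splitlines():
        match = TASK_LINE.match(line)
        if match and match.group(1) == " ":
            return match.group(2).strip()
    return None


def mark_done(implementation_md: str, task: str) -> str:
    """Tick off the first open checkbox matching `task` and return the updated plan."""
    out = []
    done = False
    for line in implementation_md.splitlines():
        match = TASK_LINE.match(line)
        if not done and match and match.group(1) == " " and match.group(2).strip() == task:
            line = line.replace("[ ]", "[x]", 1)  # the checkbox precedes the task text
            done = True
        out.append(line)
    return "\n".join(out) + "\n"
```

The agent (or you) calls `next_task`, works on exactly that one item, then writes the result of `mark_done` back to the file before the next session starts.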

This works around context window limits. Instead of stuffing everything into a single prompt, you feed the model just what it needs, at the right time.
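To make "just what it needs" concrete, here’s a minimal sketch that concatenates only the requested files, in priority order, and stops before a token budget is exceeded. The 4-characters-per-token estimate is a rough heuristic, not a real tokenizer, and `assemble_context` is a hypothetical helper; swap in your model’s tokenizer if you need accurate counts.

```python
from __future__ import annotations

# Rough heuristic (an assumption, not a real tokenizer): about 4 characters per token.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def assemble_context(files: dict[str, str], wanted: list[str], budget_tokens: int) -> str:
    """Join the files named in `wanted` (highest priority first) until the budget is hit."""
    parts: list[str] = []
    used = 0
    for name in wanted:
        section = f"## {name}\n{files[name]}\n"
        cost = estimate_tokens(section)
        if used + cost > budget_tokens:
            break  # leave lower-priority files out rather than overflow the window
        parts.append(section)
        used += cost
    return "".join(parts)
```

Stopping at the first file that doesn’t fit (instead of skipping it and trying smaller ones) keeps the priority order predictable: the workflow rule and the current task always win.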

You can even create custom slash commands in Claude Code (e.g., /generate_implementation) that hold your rules, so scaffolds are repeatable.
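In Claude Code, project-level custom slash commands are Markdown files in `.claude/commands/`: the file name becomes the command name, and `$ARGUMENTS` is replaced with whatever you type after the command (check the current Claude Code docs, since these conventions can change). This minimal sketch writes such a file; the rule text and the `write_command` helper are hypothetical.

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical rule text for a /generate_implementation command.
# $ARGUMENTS is the placeholder Claude Code fills with text typed after the command.
GENERATE_IMPLEMENTATION_RULE = """\
Read prd.md and write implementation.md as a task-by-task build plan.
Use "- [ ]" checkboxes so progress can be tracked across sessions.
Respect the scope stated in the PRD (MVP vs full app).
Extra instructions: $ARGUMENTS
"""


def write_command(project_root: Path, name: str, body: str) -> Path:
    """Create (or overwrite) .claude/commands/<name>.md and return its path."""
    commands_dir = project_root / ".claude" / "commands"
    commands_dir.mkdir(parents=True, exist_ok=True)
    path = commands_dir / f"{name}.md"
    path.write_text(body, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(write_command(Path("."), "generate_implementation", GENERATE_IMPLEMENTATION_RULE))
```

Committing the `.claude/commands/` folder to the repo means everyone on the project gets the same scaffolding command.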

⚠️ Common Mistakes & Pro Tips

More context ≠ better results

“Once the context window fills up, hallucination increases.”

Don’t overload the model. Feed it modular files.

Conflicting instructions break the flow

“Even though I said MVP, the generate file asked for full app scope. So it did both.”

Always read your generate file and make sure it matches the PRD.
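A cheap guard against the MVP vs full-app mix-up is to compare the scope words in the two documents before generating anything. This is a naive keyword heuristic offered as a sketch; `scope_conflicts` and the regex patterns are hypothetical, and reading both files yourself is still the real check.

```python
from __future__ import annotations

import re

# Naive keyword heuristic (an assumption): detect which scope labels a document mentions.
SCOPE_PATTERNS = {
    "MVP": re.compile(r"\bMVP\b", re.IGNORECASE),
    "full app": re.compile(r"\bfull[- ]app\b", re.IGNORECASE),
}


def scopes_mentioned(text: str) -> set[str]:
    """Return the scope labels whose pattern appears anywhere in `text`."""
    return {scope for scope, pattern in SCOPE_PATTERNS.items() if pattern.search(text)}


def scope_conflicts(prd: str, generate_rule: str) -> set[str]:
    """Scopes the generate rule asks for that the PRD never mentions."""
    return scopes_mentioned(generate_rule) - scopes_mentioned(prd)
```

A non-empty result is a signal to stop and fix the generate file before the model tries to do both.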

Don’t rely on AI to pick your tech stack

“Eventually it’s your decision. The model might choose tools you don’t even have access to.”

Do the research yourself.

Claude Code supports multi-agent tasks

For things like UI variation generation, Claude shines. Cursor is stronger at step-by-step workflows.

📦 Cursor vs Claude: What to Use?

| Feature | Cursor | Claude Code |
|---|---|---|
| Step-by-step execution | ✅ Excellent | ✅ Good |
| Task list / PRD linking | ✅ Native | ⚠️ Requires setup |
| Multi-agent generation | ⚠️ Limited | ✅ Strong |
| Context window size | ⚠️ Smaller | ✅ Larger |
| Codebase documentation | ❌ None | ✅ |

Both support the same workflows. Use what fits your project and context needs.

✅ Final Thoughts

Context Engineering is how we shift from vibe coding to reliable, reproducible software builds with AI.

You don’t need to keep hacking prompts.

You need a system:

🔁 Repeatable

🧱 Modular

🎯 Aligned with your product goals

🧠 Designed for AI workflows, not just human ones

This is how to leverage AI to ship faster, smarter, and with way less cleanup.