At a Glance

Big Idea: AI outputs aren’t bad because the models are weak. They’re bad because we ship first drafts.

Why It Matters: As AI moves from “assistant” to “builder,” silent errors scale faster than teams can catch them. Without feedback loops, confident but wrong outputs will reach production in code, specs, and strategy.

The Big Idea: AI Quality Is a System, Not a Prompt

A few weeks ago, I used AI to generate a Product Requirements Doc (PRD) for a new internal feature.

The doc looked excellent:

Clear goals

Logical user flows

Well-written requirements

Confident timelines

If you skimmed it, you’d approve it.

But when engineers and stakeholders reviewed it, the issues surfaced:

Critical edge cases were missing

System dependencies were assumed, not validated

Security and compliance weren’t mentioned

Non-functional requirements were completely absent

Nothing was obviously wrong.

Which is exactly what made it dangerous.

That’s when it clicked for me:

AI quality isn’t about better prompts.

It’s about better systems.

The Real Failure Mode: One-Shot AI

“One-shot AI” works when the cost of being wrong is low.

Emails. Brainstorming. Rough drafts.

But the moment you use AI for:

production code

technical documentation

API contracts

product strategy

…the first draft becomes the most dangerous one.

In real systems, errors don’t announce themselves.

They slip through quietly.

And AI is very good at sounding confident while being wrong.

That’s why better prompts alone won’t save you.

There’s a deeper risk here that most teams miss.

AI systems don’t usually fail with obvious errors.

They degrade quietly through small assumptions, missing constraints, and unchecked outputs that compound over time.

In production ML systems, the closest analogue is drift: quality that erodes gradually instead of breaking outright.

In everyday AI usage, it looks like this:

Specs that slowly diverge from reality

Docs that feel right but mislead

Strategies built on assumptions no one validated

The danger isn’t bad output.

It’s unchecked output becoming the foundation for the next decision.

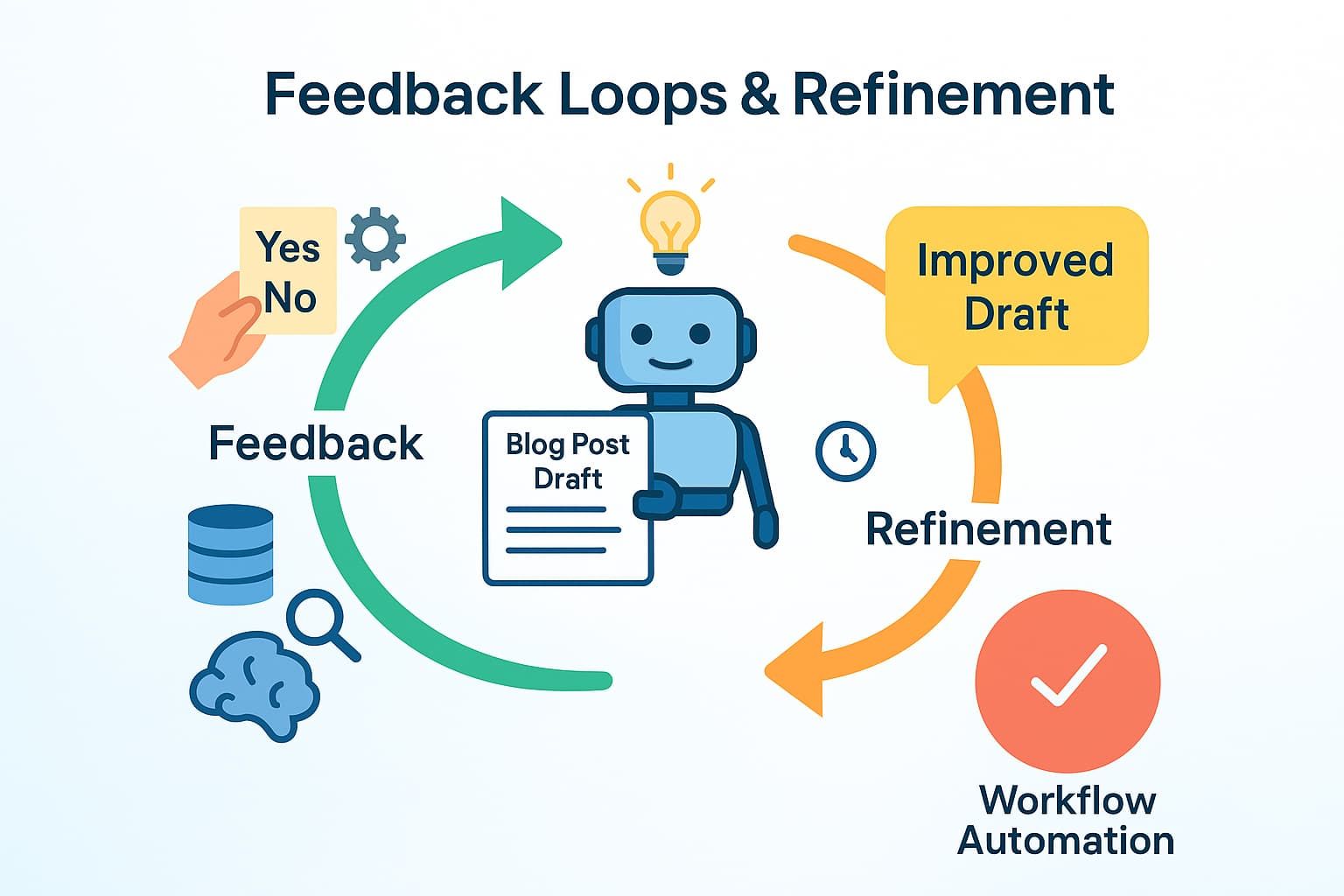

The Shift: From Prompting to Feedback Loops

The best teams aren’t prompting harder.

They’re running a simple loop:

Generate → Critique → Refine

This loop turns AI from a content generator into a thinking partner.
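As a rough sketch, the loop fits in a small Python function. It assumes a hypothetical `llm` callable that takes a prompt string and returns the model’s text; this stands in for whatever client your team actually uses and is not a real library API. The stand-in model in the usage example only records which step called it, to make the call order visible.

```python
from typing import Callable

# Hypothetical model client: takes a prompt, returns the model's reply.
# Swap in whatever API your team actually uses.
LLM = Callable[[str], str]


def quality_loop(llm: LLM, task: str, rounds: int = 1) -> str:
    """Run Generate -> Critique -> Refine, repeating critique/refine `rounds` times."""
    if rounds < 1:
        raise ValueError("rounds must be at least 1: never ship the first draft")
    # 1. Generate: the first draft, which should never be the one you ship.
    draft = llm(f"Generate:\n{task}")
    for _ in range(rounds):
        # 2. Critique: ask for problems only, so the model evaluates instead of extending.
        critique = llm(
            "Critique:\nReview this draft and list gaps, risks, "
            f"and unvalidated assumptions.\n\n{draft}"
        )
        # 3. Refine: feed the critique back in together with the draft.
        draft = llm(
            "Refine:\nRevise the draft to address these issues.\n\n"
            f"Draft:\n{draft}\n\nIssues:\n{critique}"
        )
    return draft


# Usage with a stand-in model that just reports which step called it.
steps: list[str] = []


def fake_llm(prompt: str) -> str:
    step = prompt.split(":", 1)[0]
    steps.append(step)
    return f"<{step} output>"


final = quality_loop(fake_llm, "Draft a PRD for bulk CSV export", rounds=2)
print(steps)
print(final)
```

Refusing `rounds=0` is a deliberate design choice: the function cannot return an unreviewed draft.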

How the Quality Loop Works

1. Generate (with constraints)

Don’t ask for a generic doc.

Ask for structure and boundaries.

Draft a PRD for {feature} including:

- goals

- user stories

- success metrics

- rollout plan

- known constraints

Constraints reduce hallucinations before they start.
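One way to make those constraints concrete is to keep the required sections in a list, build the prompt from it, and reuse the same list to check that the returned draft actually covers each section. This is a minimal sketch: `build_generate_prompt` and `missing_sections` are hypothetical helpers, and matching section names by substring is a deliberately crude check, not a substitute for human review.

```python
PRD_SECTIONS = ("goals", "user stories", "success metrics", "rollout plan", "known constraints")


def build_generate_prompt(feature: str, sections: tuple[str, ...] = PRD_SECTIONS) -> str:
    """Build a 'generate' prompt that names every section the draft must contain."""
    lines = [f"Draft a PRD for {feature} including:"]
    lines += [f"- {section}" for section in sections]
    return "\n".join(lines)


def missing_sections(draft: str, sections: tuple[str, ...] = PRD_SECTIONS) -> list[str]:
    """Return requested sections whose names never appear in the draft (case-insensitive)."""
    text = draft.lower()
    return [s for s in sections if s.lower() not in text]


prompt = build_generate_prompt("bulk CSV export")
print(prompt)

# A draft that silently skipped two of the requested sections.
draft = "Goals: ...\nUser stories: ...\nSuccess metrics: ..."
print(missing_sections(draft))  # ['rollout plan', 'known constraints']
```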

2. Self-Critique (the missing step)

Now ask the AI to evaluate its own work:

Review this PRD and identify:

- missing edge cases

- unrealistic assumptions

- unclear ownership or dependencies

- risks that could delay delivery or impact users

This forces the model out of creation mode and into evaluation mode.

That mode switch is where quality jumps.

This works because generation and evaluation are fundamentally different tasks.

When you ask an AI to “create,” it optimizes for fluency and completion.

When you ask it to “review,” it optimizes for gaps, risks, and failure modes.

Most quality issues disappear not because the model got smarter,

but because you forced it into the right mode.
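To keep the review in evaluation mode, the critique prompt can ask for a list of problems only, in a fixed format, rather than a rewritten document. The sketch below builds that prompt from the checklist above and parses the reply into discrete issues for the Refine step. The helper names and the `- ` bullet format are assumptions for illustration; models are not guaranteed to follow the format, so anything that isn’t a bullet is simply ignored.

```python
CRITIQUE_CHECKS = (
    "missing edge cases",
    "unrealistic assumptions",
    "unclear ownership or dependencies",
    "risks that could delay delivery or impact users",
)


def build_critique_prompt(draft: str, checks: tuple[str, ...] = CRITIQUE_CHECKS) -> str:
    """Ask for problems only, one per line, so the model reviews instead of rewriting."""
    checklist = "\n".join(f"- {check}" for check in checks)
    return (
        f"Review this PRD and identify:\n{checklist}\n\n"
        "List each issue on its own line starting with '- '. "
        "Do not rewrite the PRD.\n\n"
        f"PRD:\n{draft}"
    )


def parse_issues(critique: str) -> list[str]:
    """Extract non-empty '- ' bullet lines from the critique; ignore any other text."""
    issues = []
    for line in critique.splitlines():
        line = line.strip()
        if line.startswith("- ") and line[2:].strip():
            issues.append(line[2:].strip())
    return issues


reply = "Found these:\n- No retry policy for failed exports\n- Owner of the storage bucket is unclear\n-   \n"
print(parse_issues(reply))
```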

3. Refine (based on feedback)

Finally, feed the critique back in:

“Revise the PRD to address these issues.

Optimize for engineering feasibility and operational reality.”

This is where “looks good” turns into “actually usable.”
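A sketch of the Refine step, assuming the critique has already been parsed into a list of issue strings. Numbering the issues and asking the model to report how it handled each one makes it easier for a human reviewer to confirm nothing was silently dropped. `build_refine_prompt` is a hypothetical helper, not part of any library.

```python
def build_refine_prompt(draft: str, issues: list[str]) -> str:
    """Feed the critique back in, numbered so each issue can be traced in the revision."""
    if not issues:
        # An empty critique deserves a human look, not an automatic pass.
        raise ValueError("no issues to address; review the critique before refining")
    numbered = "\n".join(f"{i}. {issue}" for i, issue in enumerate(issues, start=1))
    return (
        "Revise the PRD to address these issues.\n"
        "Optimize for engineering feasibility and operational reality.\n\n"
        f"Issues:\n{numbered}\n\n"
        "After the revised PRD, add a 'Changes' section that states, by number, "
        "how each issue was addressed or why it was not.\n\n"
        f"PRD:\n{draft}"
    )


prompt = build_refine_prompt(
    "Goals: ...",
    ["No retry policy for failed exports", "Owner of the storage bucket is unclear"],
)
print(prompt)
```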

Real-World Impact

After rebuilding our PRDs with this loop:

Hidden assumptions surfaced early

Engineering risks became explicit decisions

Review cycles dropped from weeks to days

Last-minute scope changes during delivery became rarer

Same AI.

Same people.

Different system.

Prompt Templates: The AI Quality Loop

Product & Strategy Docs

Generate

Draft {document type} for {feature} with {explicit sections}

Critique

Identify gaps, risks, missing assumptions, and dependencies

Refine

Revise to address issues with realistic constraints

Technical Writing

Generate

Draft documentation for {feature} targeting {audience}

Critique

Identify unclear steps, missing prerequisites, or assumptions

Refine

Improve clarity and completeness

Planning & Decision-Making

Generate

Create a plan for {initiative}

Critique

Identify unrealistic timelines, resource gaps, and risks

Refine

Adjust based on constraints and tradeoffs

Same loop.

Every domain.
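Because the loop is the same everywhere, the templates above fit naturally into one lookup table keyed by domain and step. In this sketch, the placeholders are renamed to valid Python identifiers (`{doc_type}`, `{sections}`, and so on) so that `str.format` can fill them, and the domain keys are labels chosen for illustration.

```python
# Domain keys and placeholder names are illustrative, adapted from the templates above.
TEMPLATES = {
    "product_strategy": {
        "generate": "Draft {doc_type} for {feature} with {sections}",
        "critique": "Identify gaps, risks, missing assumptions, and dependencies",
        "refine": "Revise to address issues with realistic constraints",
    },
    "technical_writing": {
        "generate": "Draft documentation for {feature} targeting {audience}",
        "critique": "Identify unclear steps, missing prerequisites, or assumptions",
        "refine": "Improve clarity and completeness",
    },
    "planning": {
        "generate": "Create a plan for {initiative}",
        "critique": "Identify unrealistic timelines, resource gaps, and risks",
        "refine": "Adjust based on constraints and tradeoffs",
    },
}


def render(domain: str, step: str, **values: str) -> str:
    """Fill one template; raises KeyError if the domain, step, or a placeholder value is missing."""
    # str.format ignores extra keyword arguments, so the same values can be passed to every step.
    return TEMPLATES[domain][step].format(**values)


for step in ("generate", "critique", "refine"):
    print(render("planning", step, initiative="the Q3 data migration"))
```

Raising on a missing placeholder is intentional: an unfilled `{feature}` is exactly the kind of quiet gap the loop is meant to catch.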


The Hidden Benefit Most People Miss

As AI-generated content becomes the default input for more systems (docs feeding plans, plans feeding builds, builds feeding decisions), unchecked outputs start reinforcing each other.

This is how quality collapses:

not in one dramatic failure,

but through a thousand “looks good to me” approvals.

Feedback loops aren’t about perfection.

They’re about preventing silent degradation at scale.

Before you go

AI is very good at producing answers.

It’s terrible at knowing when those answers are safe to ship.

That gap is your responsibility.

The difference between low-leverage AI and high-leverage AI isn’t intelligence.

It’s process.

The best teams don’t trust AI less.

They just verify it more.

So if there’s one rule to take away from this:

Never ship a first draft, especially when AI wrote it.

That principle will outlast every model release.